Smaller batches and Dropout

Batch size of 256 is too large

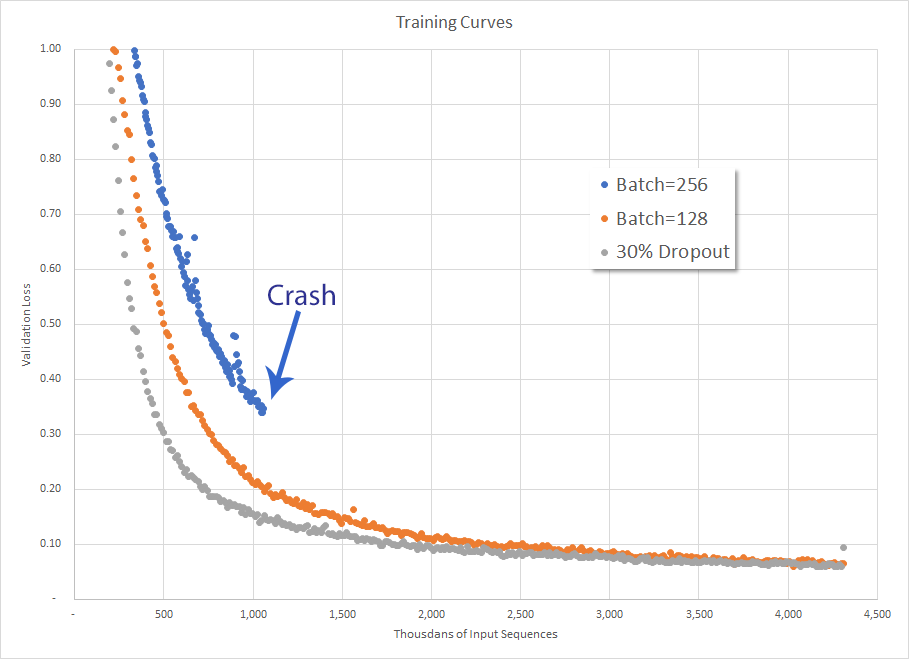

I used a batch size of 256 on my first crack at training the RNN and, as can be seen above, it crashed before completing the first epoch. I'm not sure how long it took to get to that point, but it was probably only a couple of hours in.
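As a rough illustration of what the batch size controls, here is a minimal Python sketch. It assumes the training set is simply a list of examples. The helpers `make_batches` and `batches_per_epoch` are hypothetical and are not taken from the Udacity project.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def make_batches(examples: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive mini-batches of at most batch_size examples (hypothetical helper)."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(examples), batch_size):
        yield list(examples[start:start + batch_size])


def batches_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of training steps in one pass over the data (hypothetical helper)."""
    # Ceiling division: a final, partial batch still counts as one step.
    return -(-num_examples // batch_size)


if __name__ == "__main__":
    sentences = [f"sentence {i}" for i in range(1000)]  # hypothetical toy data
    for size in (256, 128):
        print(size, batches_per_epoch(len(sentences), size))  # prints "256 4", then "128 8"
```

With 1,000 toy examples, a batch size of 256 gives 4 steps per epoch and 128 gives 8. Halving the batch size doubles the number of (smaller) updates made in each epoch.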

Adding Dropout

Not only did dropping the batch size to 128 improve training performance (the validation loss came down more quickly), but the RNN also trained successfully for 6 hours on my local machine. Even so, it's generally a good idea to use some form of regularization to prevent overfitting. The Udacity project didn't include any regularization, so I added 30% dropout. This had the happy effect of initially increasing the rate at which the validation loss dropped, but by the end of the epoch the validation loss was about the same as it had been without dropout.
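To make the 30% figure concrete, here is a minimal sketch of inverted dropout in plain Python. It is not the project's code: the `dropout` function and the toy hidden-state values are hypothetical, and real RNN frameworks apply dropout to tensors inside the recurrent layers rather than to a Python list.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float = 0.3, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout (hypothetical helper).

    During training, each value is zeroed with probability `rate`, and the
    survivors are scaled by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the values pass through untouched.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    hidden = [1.0] * 10_000  # hypothetical hidden-state values
    dropped = dropout(hidden, rate=0.3, rng=rng)
    zero_fraction = sum(1 for a in dropped if a == 0.0) / len(dropped)
    print(f"fraction zeroed: {zero_fraction:.3f}")  # roughly 0.3
    print(f"mean after scaling: {sum(dropped) / len(dropped):.3f}")  # roughly 1.0
```

In practice you would use the framework's built-in option, such as the `dropout` argument on Keras's `LSTM` layer, rather than hand-rolling it. APIs that take a keep probability instead of a drop rate would use 0.7 for 30% dropout.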

The spelling correction is also not working particularly well. As an example, “he had dated forI much of the past” becomes “Sou had teap for much to heads tap”. There’s obviously more work to be done.
