Goldilocks batch size

Batch size of 64 is too small

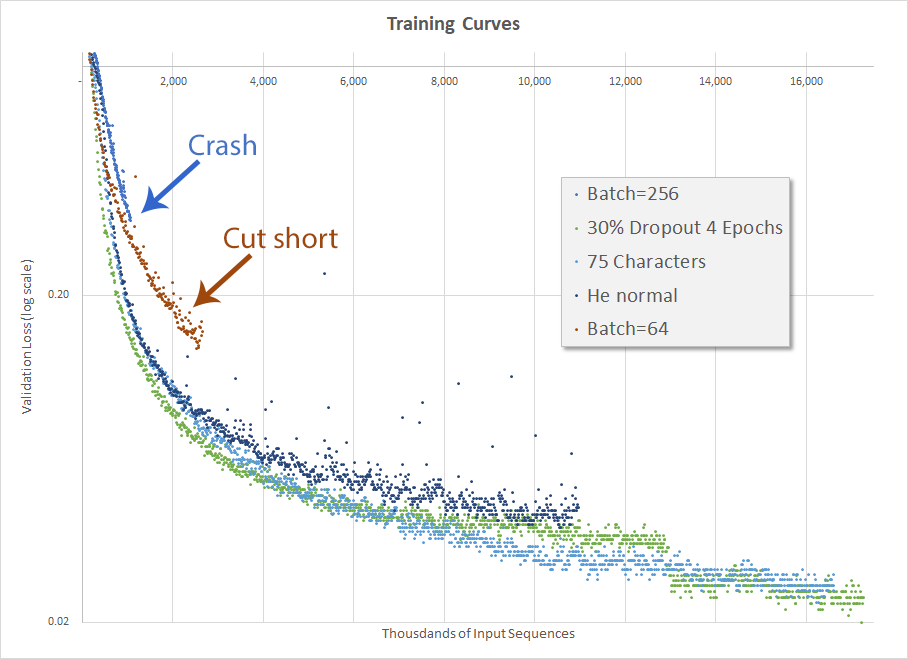

As we learned in a previous post, a batch size of 256 is too large. I’ve been running with a batch size of 128 since then, which got me thinking: if 128 is better than 256, then perhaps 64 is better still.

It is not.

For whatever reason, training with a batch size of 64 progressed significantly more slowly than any of my runs with a batch size of 128. It was poor enough that I cut it off before it had completed even the first epoch. I’ll be going back to 128 now because, apparently, that one is “just right.”
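
I don't have an explanation for the slowdown, but one thing that certainly changes with batch size is how many optimizer steps it takes to get through an epoch. Here is a minimal sketch of that arithmetic. The dataset size of 100,000 examples and the helper name `steps_per_epoch` are made up for illustration and do not come from my actual runs.

```python
import math

# Hypothetical dataset size, chosen only for illustration (not from the real experiments).
NUM_EXAMPLES = 100_000


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of batches (optimizer updates) needed to see every example once."""
    # Round up so the final, partially filled batch still counts as a step.
    return math.ceil(num_examples / batch_size)


# Compare the three batch sizes discussed in these posts.
for batch_size in (64, 128, 256):
    print(f"batch size {batch_size}: {steps_per_epoch(NUM_EXAMPLES, batch_size)} steps per epoch")
```

Halving the batch size roughly doubles the number of steps in an epoch, so "before completing the first epoch" covers about twice as many updates at 64 as it does at 128. That alone doesn't say which setting trains better; it is just worth keeping in mind when comparing runs.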
